Publications

From Motion to Localization: Cross-View Optimization with Stationary Event and RGB Cameras for Enhanced Pose Estimation

Accepted for publication at Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT). 2026

@article{directevs,

author = {Yukun Zhao and Xinyuan Song and Huajian Huang and Tristan Braud},

journal = {Accepted for publication at Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT)},

title = {From Motion to Localization: Cross-View Optimization with Stationary Event and RGB Cameras for Enhanced Pose Estimation},

year = {2026}

}

Applications such as Augmented Reality (AR) require accurate device positioning to minimize alignment errors. While visual positioning techniques offer high accuracy, their performance can degrade due to environmental changes like lighting variations and object movements. This paper introduces a new approach to visual positioning, relying on a stationary joint event/RGB sensing platform to track scene dynamics in real-time. This platform is at the core of a localization pipeline to predict the pose of user devices. First, a cross-modal object tracker matches dynamic objects between RGB and event images captured by the platform. These objects contribute to building a dynamic map, combined with the initial static 3D Structure from Motion (SfM) model to form a global feature map. Finally, a cross-view pose optimizer estimates pose uncertainties between modalities to refine and improve localization accuracy. To validate our approach, we collect a large-scale dataset over three scenes to account for typical AR scenarios where dynamics can affect the quality of visual positioning. We contribute this dataset to the community for future research on scene dynamics. Our approach shows significant improvement over existing methods, reducing translation and rotation errors by 12.9% and 13.4%, respectively, for weekly data over 4 weeks, and by 38.5% and 16.2% for monthly data over 4 months, compared to HLoc (SP+SG). It also reduces performance degradation by up to 50% after only 4 weeks.

Visualizing and Sonifying Scenes of Potential Future Urbanism in Shenzhen's Sea World in “High Sections / Low Leaps”

Proceedings of the 18th International Symposium on Visual Information Communication and Interaction. 2025

@inproceedings{10.1145/3769534.3769591,

address = {New York, NY, USA},

articleno = {76},

author = {Sagesser, Marcel Zaes and Braud, Tristan and Lee, Gyuwon Sylvia and Wu, Zhen},

booktitle = {Proceedings of the 18th International Symposium on Visual Information Communication and Interaction},

doi = {10.1145/3769534.3769591},

isbn = {9798400718458},

keywords = {Urbanism, Speculative Futures, Creative Technologies, GenAI, Interactive Arts, Spatial Audio},

month = {Dec},

numpages = {2},

publisher = {Association for Computing Machinery},

series = {VINCI '25},

title = {Visualizing and Sonifying Scenes of Potential Future Urbanism in Shenzhen's Sea World in “High Sections / Low Leaps”},

url = {https://doi.org/10.1145/3769534.3769591},

year = {2025}

}

In Shenzhen’s Sea World in China’s Greater Bay Area, on reclaimed land, ocean-side promenades are set in front of impressive glass towers. Next to them lie a container port, construction sites, and densely populated older areas. A rapidly built vision of Shenzhen’s future co-exists with traces from its various pasts. “High Sections / Low Leaps” is an audiovisual artwork that reconstructs actual and imagined development phases of China’s Greater Bay Area, one of the fastest developing areas on the planet. This artwork element, with the subtitle “Shenzhen’s Sea World Ocean Mall,” is an interactive scene rendered in a game engine, presented to the audience on two screens, showing otherworldly office towers that grow and shrink. 3D modeling, AI-generated facade elements, and spatial audio are employed to create dynamic audiovisual cityscape speculations. A camera tracks the number of spectators in proximity and switches the scene from past to future phases, allowing for subtle control over the work’s temporality. The artwork investigates how rapid urban development, such as in the Greater Bay Area, can be rendered perceptible for a public, fostering reflection on the relationship between new technologies (including GenAI) and imagined (and built) urban futures. At the intersection of computer graphics, data visualization, urbanism, creative technologies, spatial audio, and generative AI, this approach argues for a reflection on the consequences of human-technological collaboration in view of desired humane rather than technological outcomes. The pilot city Shenzhen holds significance for cities globally.

When the Observer Becomes the Observed

Accepted for publication at SIGGRAPH Asia 2025 Art Papers. 2025

@incollection{wu2024observer,

author = {Zhen Wu and Xiaomin Fan and Mika Shirahama and Tristan Braud},

booktitle = {Accepted for publication at SIGGRAPH Asia 2025 Art Papers},

month = {dec},

title = {When the Observer Becomes the Observed},

year = {2025}

}

Traditional sensors function as exact measuring instruments to represent the physical world. Conversely, humans are subjective, inaccurate sensors, whose measured quantities are often influenced by their perception of the environment. In this project, we speculate on the possibility of a sensing system where the human being becomes the sensor. We observe the human sensor through their movement, as a performative expression of the measure. We explore this concept through site-specific embodied practices, gathering movement and textual expressions related to environmental sensing from professional choreographers. This data is then utilized to fine-tune a large language model that generates abstract words based on the input movements. This language model is integrated into a system as a critical alternative to the technology of sensing, where the process of sensing turns into reflecting the observer's subjective body expression rather than providing a 1:1 mapping of the phenomenon.

PLANA3R: Zero-shot Metric Planar 3D Reconstruction via Feed-Forward Planar Splatting

Accepted for publication at 39th Conference on Neural Information Processing Systems (NeurIPS 2025). 2025

@inproceedings{liu2025plana3rzeroshotmetricplanar,

author = {Changkun Liu and Bin Tan and Zeran Ke and Shangzhan Zhang and Jiachen Liu and Ming Qian and Nan Xue and Yujun Shen and Tristan Braud},

booktitle = {Accepted for publication at 39th Conference on Neural Information Processing Systems (NeurIPS 2025)},

month = {Dec},

title = {PLANA3R: Zero-shot Metric Planar 3D Reconstruction via Feed-Forward Planar Splatting},

year = {2025}

}

This paper addresses metric 3D reconstruction of indoor scenes by exploiting their inherent geometric regularities with compact representations. Using planar 3D primitives -- a well-suited representation for man-made environments -- we introduce PLANA3R, a pose-free framework for metric 3D reconstruction from unposed two-view images. Our approach employs Vision Transformers to extract a set of sparse planar primitives, estimate relative camera poses, and supervise geometry learning via planar splatting, where gradients are propagated through high-resolution rendered depth and normal maps of primitives. Unlike prior feed-forward methods that require 3D plane annotations during training, PLANA3R learns planar 3D structures without explicit plane supervision, enabling scalable training on large-scale stereo datasets using only depth and normal annotations. We validate PLANA3R on multiple indoor-scene datasets with metric supervision and demonstrate strong generalization to out-of-domain indoor environments across diverse tasks under metric evaluation protocols, including 3D surface reconstruction, depth estimation, and relative pose estimation. Furthermore, because it is formulated with a planar 3D representation, our method gains the ability to segment planes accurately.

Toward AI-driven UI transition intuitiveness inspection for smartphone apps

International Journal of Human-Computer Studies. 2025

@article{HU2025103661,

author = {Xiaozhu Hu and Xiaoyu Mo and Xiaofu Jin and Yuan Chai and Yongquan Hu and Mingming Fan and Tristan Braud},

doi = {https://doi.org/10.1016/j.ijhcs.2025.103661},

issn = {1071-5819},

journal = {International Journal of Human-Computer Studies},

keywords = {UI transition intuitiveness, AI-driven user simulation, Design inspection},

month = {Nov},

pages = {103661},

title = {Toward AI-driven UI transition intuitiveness inspection for smartphone apps},

url = {https://www.sciencedirect.com/science/article/pii/S1071581925002186},

volume = {206},

year = {2025}

}

Participant-involved formative evaluations are necessary to ensure the intuitiveness of UI transitions in mobile apps, but they are neither scalable nor immediate. Recent advances in AI-driven user simulation show promise, but they have not specifically targeted this scenario. This work introduces UTP (UI Transition Predictor), a tool designed to facilitate formative evaluations of UI transitions through two key user simulation models: 1. Predicting and explaining potential user uncertainty during navigation. 2. Predicting the UI element users would most likely select to transition between screens and explaining the corresponding reasons. These models are built on a human-annotated dataset of UI transitions, comprising 140 UI screen pairs and encompassing both high-fidelity and low-fidelity counterparts of UI screen pairs. Technical evaluation indicates that the models outperform GPT-4o in predicting user uncertainty and achieve comparable performance in predicting users’ selection of UI elements for transitions using a lighter, open-weight model. The tool has been validated to support the rapid screening of design flaws and the confirmation of UI transitions that appear to be intuitive.

HK-GenSpeech: A Generative AI Scene Creation Framework for Speech Based Cognitive Assessment

Proceedings of the 2025 Conference of the International Speech Communication Association (INTERSPEECH 2025). 2025

@inproceedings{yong2025hkgenspeech,

author = {Yong, Vi Jun Sean and Kumyol, Serkan and Low, Pau Le Lisa and Leung, Suk Wai Winnie and Braud, Tristan},

booktitle = {Proceedings of the 2025 Conference of the International Speech Communication Association (INTERSPEECH 2025)},

month = {aug},

title = {{HK-GenSpeech}: A Generative AI Scene Creation Framework for Speech Based Cognitive Assessment},

year = {2025}

}

Current methods of automated speech-based cognitive assessment often rely on fixed-picture descriptions in major languages, limiting repeatability, engagement, and locality. This paper introduces HK-GenSpeech (HKGS), a framework using generative AI to create pictures that present similar features to those used in cognitive assessment, augmented with descriptors reflecting the local context. We demonstrate HKGS through a dataset of 423 Cantonese speech samples collected in Hong Kong from 141 participants, with HK-MoCA scores ranging from 11 to 30. Each participant described the cookie theft picture, an HKGS fixed image, and an HKGS dynamic image. Regression experiments show comparable accuracy for all image types, indicating HKGS' adequacy in generating relevant assessment images. Lexical analysis further suggests that HKGS images elicit richer speech. By mitigating learning effects and improving engagement, HKGS supports broader data collection, particularly in low-resource settings.

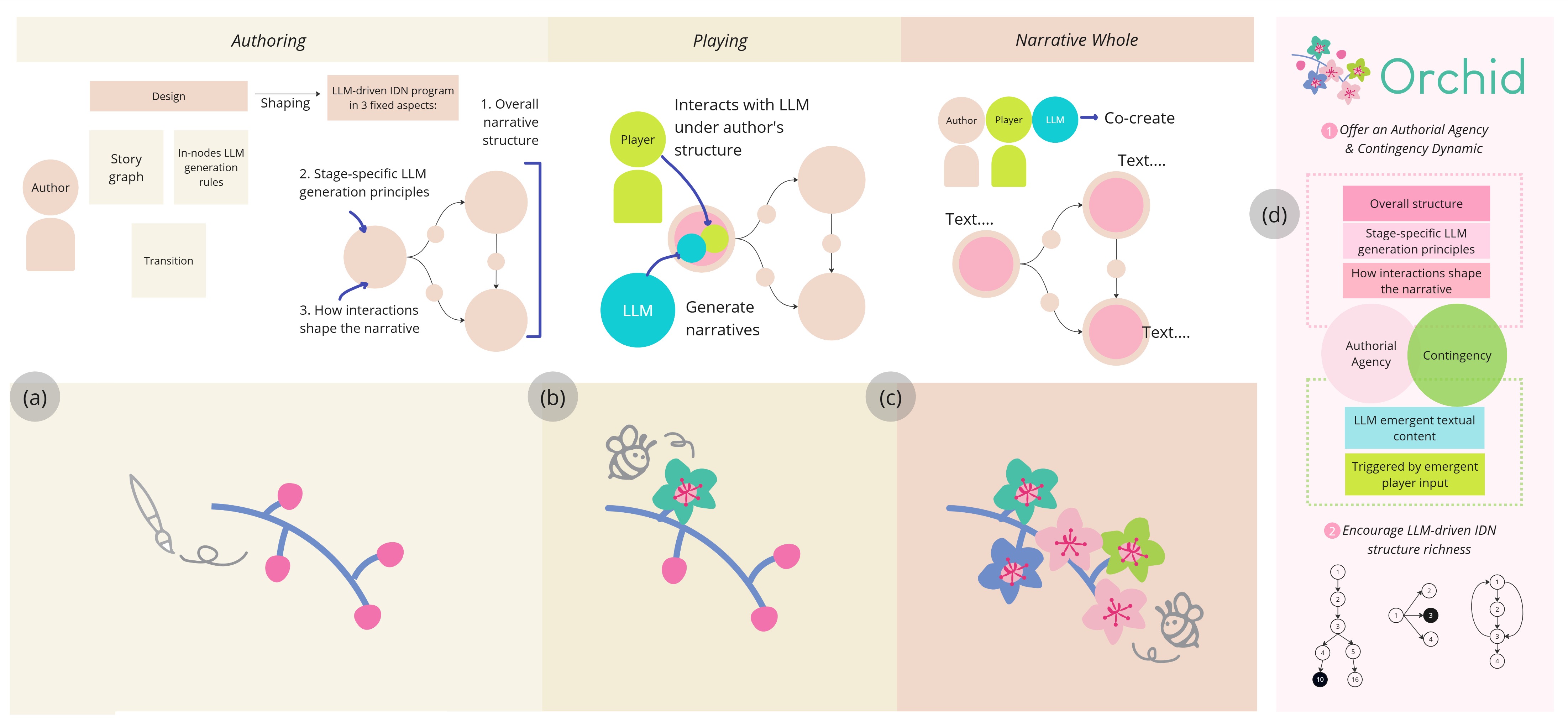

Orchid: A Creative Approach for Authoring LLM-Driven Interactive Narratives

Creativity and Cognition (C&C '25), June 23–25, 2025, Virtual, United Kingdom. 2025

@inproceedings{wu2025orchid,

author = {Zhen Wu and Serkan Kumyol and Shing Yin Wong and Xiaozhu Hu and Xin Tong and Tristan Braud},

booktitle = {Creativity and Cognition (C&C '25), June 23--25, 2025, Virtual, United Kingdom},

month = {jun},

title = {Orchid: A Creative Approach for Authoring LLM-Driven Interactive Narratives},

year = {2025}

}

Integrating Large Language Models (LLMs) into Interactive Digital Narratives (IDNs) enables dynamic storytelling where user interactions shape the narrative in real time, challenging traditional authoring methods. This paper presents the design study of Orchid, a creative approach for authoring LLM-driven IDNs. Orchid allows users to structure the hierarchy of narrative stages and define the rules governing LLM narrative generation and transitions between stages. The development of Orchid consists of three phases: 1) formulating Orchid through desk research on existing IDN practices, 2) implementing a technology probe based on Orchid, and 3) evaluating how IDN authors use Orchid to design IDNs, verify Orchid's hypotheses, and explore user needs for future authoring tools. This study confirms that authors are open to LLM-driven IDNs but desire strong authorial agency over narrative structures, particularly accuracy in branching transitions and story details. Future design implications for Orchid include introducing deterministic variable handling, support for trans-media applications, and narrative consistency across branches.

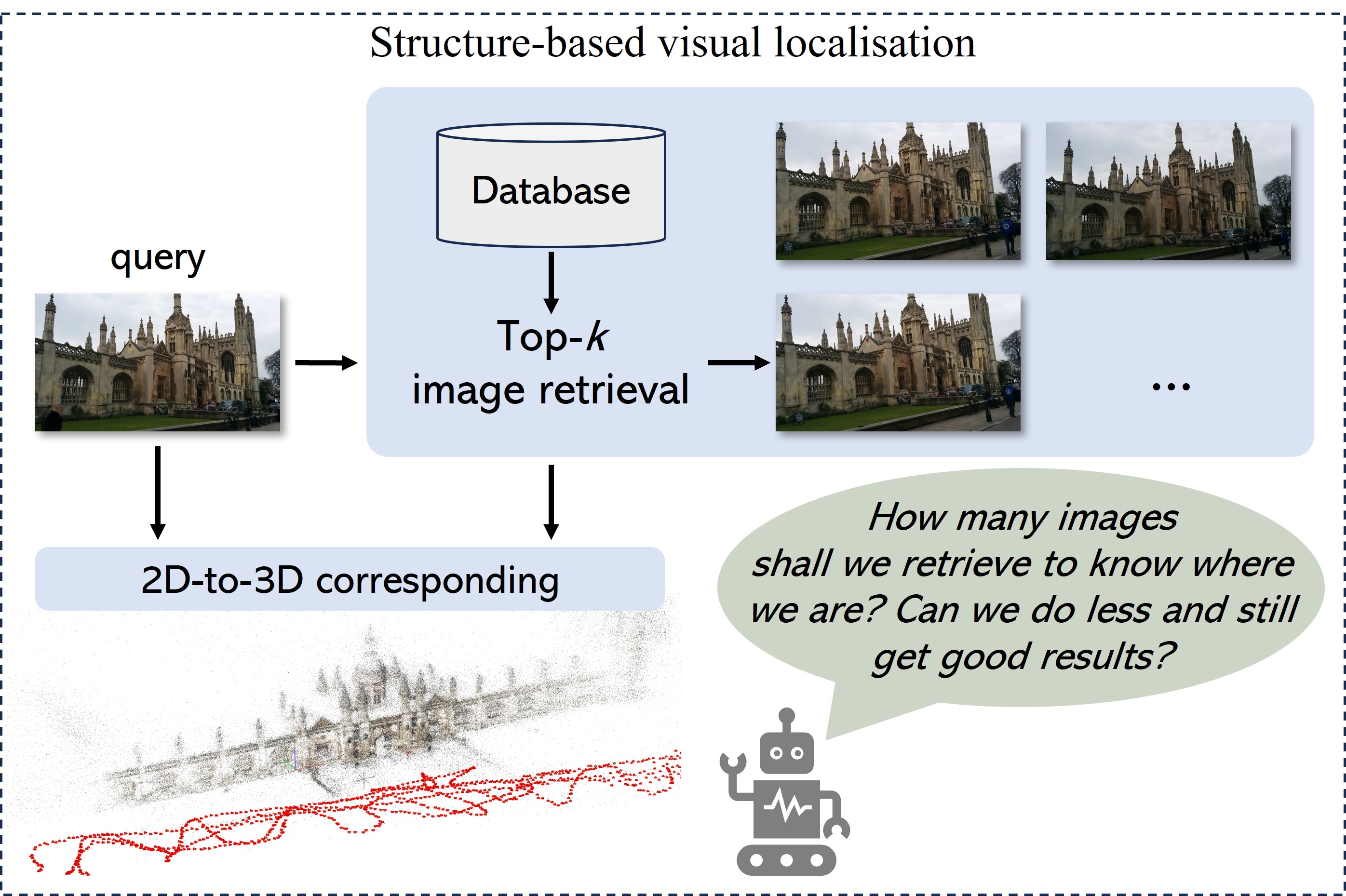

AIR-HLoc: Adaptive Image Retrieval for Efficient Visual Localisation

2025 IEEE International Conference on Robotics and Automation (ICRA). 2025

@inproceedings{liu2024air,

author = {Changkun Liu and Jianhao Jiao and Huajian Huang and Zhengyang Ma and Dimitrios Kanoulas and Tristan Braud},

booktitle = {2025 IEEE International Conference on Robotics and Automation (ICRA)},

month = {may},

title = {AIR-HLoc: Adaptive Image Retrieval for Efficient Visual Localisation},

year = {2025}

}

State-of-the-art hierarchical localisation pipelines (HLoc) employ image retrieval (IR) to establish 2D-3D correspondences by selecting the top-k most similar images from a reference database. While increasing k improves localisation robustness, it also linearly increases computational cost and runtime, creating a significant bottleneck. This paper investigates the relationship between global and local descriptors, showing that greater similarity between the global descriptors of query and database images increases the proportion of feature matches. Low similarity queries significantly benefit from increasing k, while high similarity queries rapidly experience diminishing returns. Building on these observations, we propose an adaptive strategy that adjusts k based on the similarity between the query's global descriptor and those in the database, effectively mitigating the feature-matching bottleneck. Our approach optimizes processing time without sacrificing accuracy. Experiments on three indoor and outdoor datasets show that AIR-HLoc reduces feature matching time by up to 30%, while preserving state-of-the-art accuracy. The results demonstrate that AIR-HLoc facilitates a latency-sensitive localisation system.
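
The adaptive strategy described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the use of the single best cosine similarity as the decision signal, and the threshold values (0.8, 0.6) and k values (5, 10, 20) are all hypothetical choices made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def adaptive_k(query_desc, database_descs,
               thresholds=((0.8, 5), (0.6, 10)), k_max=20):
    """Choose how many database images to retrieve for one query.

    Hypothetical rule: the higher the best query-to-database similarity,
    the fewer images are retrieved. Thresholds and k values are
    illustrative assumptions, not values from the paper.
    """
    best = max(cosine_similarity(query_desc, d) for d in database_descs)
    for min_similarity, k in thresholds:
        if best >= min_similarity:
            return k
    return k_max


def retrieve(query_desc, database_descs):
    """Return indices of the top-k database images, with k set adaptively."""
    k = adaptive_k(query_desc, database_descs)
    ranked = sorted(
        range(len(database_descs)),
        key=lambda i: cosine_similarity(query_desc, database_descs[i]),
        reverse=True,
    )
    return ranked[:k]
```

In this sketch, a query that closely matches some database image is passed to feature matching with only a few candidates, while a poorly matched query falls back to the largest k.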

LiteVLoc: Map-Lite Visual Localization for Image Goal Navigation

2025 IEEE International Conference on Robotics and Automation (ICRA). 2025

@inproceedings{jiao2024litevloc,

author = {Jianhao Jiao and Jinhao He and Changkun Liu and Sebastian Aegidius and Xiangcheng Hu and Tristan Braud and Dimitrios Kanoulas},

booktitle = {2025 IEEE International Conference on Robotics and Automation (ICRA)},

month = {may},

title = {LiteVLoc: Map-Lite Visual Localization for Image Goal Navigation},

year = {2025}

}

This paper presents LiteVLoc, a hierarchical visual localization framework that uses a lightweight topo-metric map to represent the environment. The method consists of three sequential modules that estimate camera poses in a coarse-to-fine manner. Unlike mainstream approaches relying on detailed 3D representations, LiteVLoc reduces storage overhead by leveraging learning-based feature matching and geometric solvers for metric pose estimation. A novel dataset for the map-free relocalization task is also introduced. Extensive experiments including localization and navigation in both simulated and real-world scenarios have validated the system's performance and demonstrated its precision and efficiency for large-scale deployment. Code and data will be made publicly available.

GS-CPR: Efficient Camera Pose Refinement via 3D Gaussian Splatting

The Thirteenth International Conference on Learning Representations (ICLR). 2025

@inproceedings{liu2025gscpr,

author = {Changkun Liu and Shuai Chen and Yash Sanjay Bhalgat and Siyan HU and Ming Cheng and Zirui Wang and Victor Adrian Prisacariu and Tristan Braud},

booktitle = {The Thirteenth International Conference on Learning Representations (ICLR)},

month = {apr},

title = {{GS}-{CPR}: Efficient Camera Pose Refinement via 3D Gaussian Splatting},

url = {https://openreview.net/forum?id=mP7uV59iJM},

year = {2025}

}

We leverage 3D Gaussian Splatting (3DGS) as a scene representation and propose a novel test-time camera pose refinement (CPR) framework, GS-CPR. This framework enhances the localization accuracy of state-of-the-art absolute pose regression and scene coordinate regression methods. The 3DGS model renders high-quality synthetic images and depth maps to facilitate the establishment of 2D-3D correspondences. GS-CPR obviates the need for training feature extractors or descriptors by operating directly on RGB images, utilizing the 3D foundation model, MASt3R, for precise 2D matching. To improve the robustness of our model in challenging outdoor environments, we incorporate an exposure-adaptive module within the 3DGS framework. Consequently, GS-CPR enables efficient one-shot pose refinement given a single RGB query and a coarse initial pose estimation. Our proposed approach surpasses leading NeRF-based optimization methods in both accuracy and runtime across indoor and outdoor visual localization benchmarks, achieving new state-of-the-art accuracy on two indoor datasets.

SC-OmniGS: Self-Calibrating Omnidirectional Gaussian Splatting

The Thirteenth International Conference on Learning Representations (ICLR). 2025

@inproceedings{huang2025scomnigs,

author = {Huajian Huang and Yingshu Chen and Longwei Li and Hui Cheng and Tristan Braud and Yajie Zhao and Sai-Kit Yeung},

booktitle = {The Thirteenth International Conference on Learning Representations (ICLR)},

month = {apr},

title = {{SC}-Omni{GS}: Self-Calibrating Omnidirectional Gaussian Splatting},

url = {https://openreview.net/forum?id=7idCpuEAiR},

year = {2025}

}

360-degree cameras streamline data collection for radiance field 3D reconstruction by capturing comprehensive scene data. However, traditional radiance field methods do not address the specific challenges inherent to 360-degree images. We present SC-OmniGS, a novel self-calibrating omnidirectional Gaussian splatting system for fast and accurate omnidirectional radiance field reconstruction using 360-degree images. Rather than converting 360-degree images to cube maps and performing perspective image calibration, we treat 360-degree images as a whole sphere and derive a mathematical framework that enables direct omnidirectional camera pose calibration accompanied by 3D Gaussians optimization. Furthermore, we introduce a differentiable omnidirectional camera model in order to rectify the distortion of real-world data for performance enhancement. Overall, the omnidirectional camera intrinsic model, extrinsic poses, and 3D Gaussians are jointly optimized by minimizing weighted spherical photometric loss. Extensive experiments have demonstrated that our proposed SC-OmniGS is able to recover a high-quality radiance field from noisy camera poses or even no pose prior in challenging scenarios characterized by wide baselines and non-object-centric configurations. The noticeable performance gain in the real-world dataset captured by consumer-grade omnidirectional cameras verifies the effectiveness of our general omnidirectional camera model in reducing the distortion of 360-degree images.

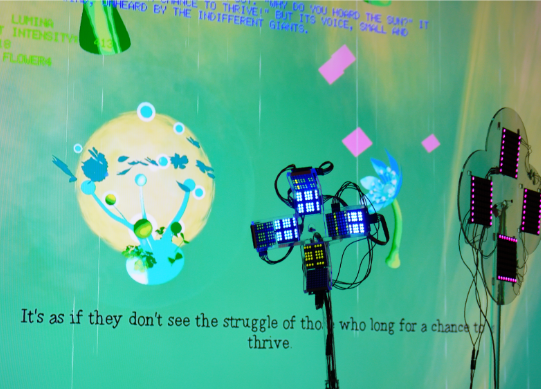

I Light U Up: Exploring a New Emergent Narrative Paradigm through Physical Data Participation in AI Generative Experiences

SIGGRAPH Asia 2024 Art Papers. 2024

@incollection{wu2024light,

author = {Zhen Wu and Ruoyu Wen and Marcel Zaes Sagesser and Sum Yi Ma and Jiashuo Xian and Wenda Wu and Tristan Braud},

booktitle = {SIGGRAPH Asia 2024 Art Papers},

month = {dec},

pages = {1--9},

title = {I Light U Up: Exploring a New Emergent Narrative Paradigm through Physical Data Participation in AI Generative Experiences},

year = {2024}

}

This paper introduces "I Light U Up", an interactive installation that explores the use of light as input for emergent narrative powered by an LLM. Through an installation with light sensors, the audience uses light to communicate with the parallel digital beings, generating a narrative experience that emerges from the interaction. This work serves as a proof-of-concept, supporting a conceptual framework that aims to open new perspectives on the participation of physical data in emergent narrative experiences. It seeks to develop a new dimension in contrast to the text-centric one-way relationship between reader and story by including agency beyond human interactivity.

Nature: Metaphysics + Metaphor (N:M+M): Exploring Persistence, Feedback, and Visualisation in Mixed Reality Performance Arts

SIGGRAPH Asia 2024 Art Papers. 2024

@incollection{braud2024nature,

author = {Tristan Braud and Brian Lau and Dominie Hoi Lam Chan and Chun Ming Wu and Zhen Wu and Vi Jun Sean Yong and Kirill Shatilov},

booktitle = {SIGGRAPH Asia 2024 Art Papers},

month = {dec},

pages = {1--7},

title = {Nature: Metaphysics + Metaphor (N:M+M): Exploring Persistence, Feedback, and Visualisation in Mixed Reality Performance Arts},

year = {2024}

}

Nature: Metaphysics + Metaphor (N:M+M) is an art experience and exhibition that explores the intersection of Nature, Art, Man, and Technology. This immersive experience aims to bridge physical and digital, tangible and intangible, and ephemeral and persistent in mixed reality arts through new interaction and visualization methods. The performance was presented in front of an audience of 50 people, during which the artists constructed a physical-digital artwork that became part of a subsequent exhibition. This paper describes the rationale, creative approach, and technical contribution behind this work while reflecting on audience feedback and future directions.

SoundMorphTPU: Exploring Gesture Mapping in Deformable Interfaces for Music Interaction

Proceedings of the International Conference on New Interfaces for Musical Expression. 2024

@inproceedings{wu2024soundmorphtpu,

author = {Zhen Wu and Ze Gao and Hua Xu and Xingxing Yang and Tristan Braud},

booktitle = {Proceedings of the International Conference on New Interfaces for Musical Expression},

month = {sep},

pages = {395--406},

title = {SoundMorphTPU: Exploring Gesture Mapping in Deformable Interfaces for Music Interaction},

year = {2024}

}

Deformable interfaces are an emerging field with significant potential for use in computing applications, particularly in the design of Digital Music Instruments (DMIs). While prior works have investigated the design of gestural input for deformable interfaces and developed novel musical interactions, there remains a gap in understanding tangible gestures as input and their corresponding output from the users' perspective. This study explores the relationship between gestural input and the output of a deformable interface for multi-gestural music interaction. Following a pilot study to explore materials and their corresponding intuitive gestures with participants, we develop a TPU fabric interface as a probe to investigate this question in the context of musical interaction. Through user engagement with the probe as a sound control, we discovered that the input-output relationship between gestures and the sound can have meaningful implications for users' embodied interaction with the system. Our research deepens the understanding of designing deformable interfaces and their capacity to enhance embodied experiences in music interaction scenarios.

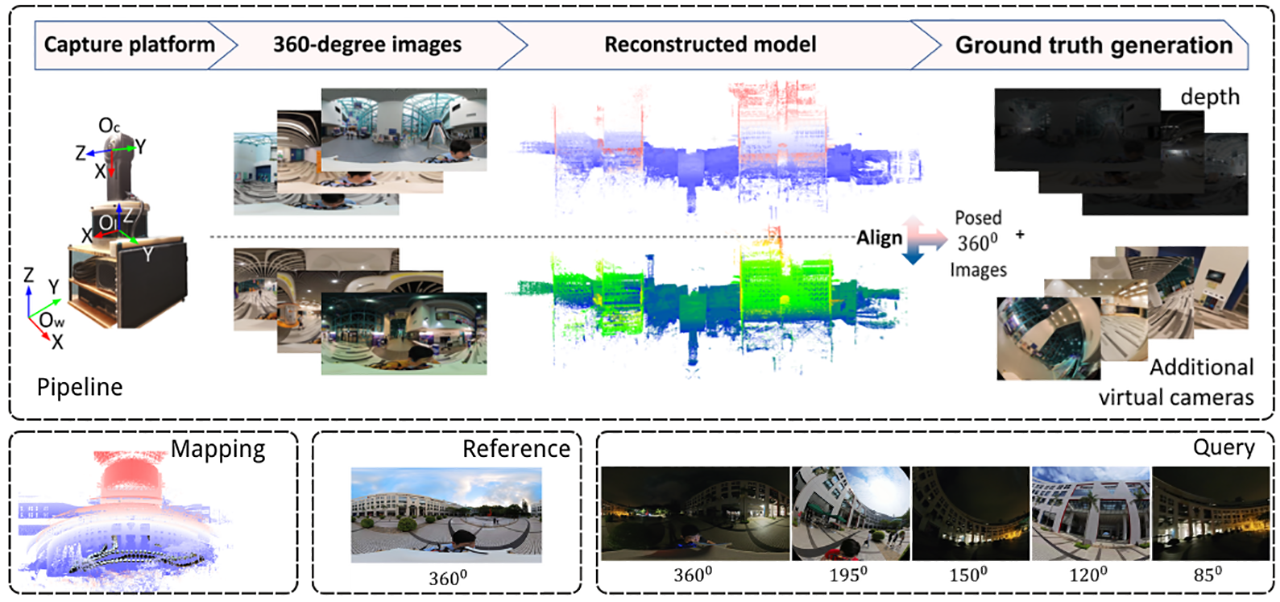

360Loc: A Dataset and Benchmark for Omnidirectional Visual Localization with Cross-device Queries

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2024

@inproceedings{huang2024360loc,

author = {Huajian Huang and Changkun Liu and Yipeng Zhu and Hui Cheng and Tristan Braud and Sai-Kit Yeung},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {jun},

pages = {22314--22324},

title = {360Loc: A Dataset and Benchmark for Omnidirectional Visual Localization with Cross-device Queries},

year = {2024}

}

Portable 360° cameras are becoming a cheap and efficient tool to establish large visual databases. By capturing omnidirectional views of a scene, these cameras could expedite building environment models that are essential for visual localization. However, such an advantage is often overlooked due to the lack of valuable datasets. This paper introduces a new benchmark dataset, 360Loc, composed of 360° images with ground truth poses for visual localization. We present a practical implementation of 360° mapping combining 360° images with lidar data to generate the ground truth 6DoF poses. 360Loc is the first dataset and benchmark that explores the challenge of cross-device visual positioning, involving 360° reference frames, and query frames from pinhole, ultra-wide FoV fisheye, and 360° cameras. We propose a virtual camera approach to generate lower-FoV query frames from 360° images, which ensures a fair comparison of performance among different query types in visual localization tasks. We also extend this virtual camera approach to feature matching-based and pose regression-based methods to alleviate the performance loss caused by the cross-device domain gap, and evaluate its effectiveness against state-of-the-art baselines. We demonstrate that omnidirectional visual localization is more robust in challenging large-scale scenes with symmetries and repetitive structures. These results provide new insights into 360-camera mapping and omnidirectional visual localization with cross-device queries.
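
As a rough illustration of how a lower-FoV frame can be derived from a 360° image, the sketch below reprojects a panorama into a pinhole view. It is a generic reprojection, not necessarily the virtual camera approach of the paper, and it makes several assumptions: the panorama is stored in equirectangular format as a list of rows, the virtual camera only rotates about the vertical axis (yaw), and pixels are sampled with nearest-neighbour lookup. The function name `virtual_pinhole_view` and its parameters are hypothetical.

```python
import math


def virtual_pinhole_view(pano, out_w, out_h, fov_deg, yaw_deg=0.0):
    """Sample a pinhole view from an equirectangular panorama.

    pano    : list of rows; pano[row][col] is a pixel value (assumed layout)
    fov_deg : horizontal field of view of the virtual camera, in degrees
    yaw_deg : rotation of the virtual camera about the vertical axis
    """
    pano_h, pano_w = len(pano), len(pano[0])
    # Focal length in pixels from the horizontal field of view.
    focal = (out_w / 2) / math.tan(math.radians(fov_deg) / 2)
    yaw = math.radians(yaw_deg)
    view = []
    for v in range(out_h):
        row = []
        for u in range(out_w):
            # Ray through the pixel centre: x right, y down, z forward.
            x = u + 0.5 - out_w / 2
            y = v + 0.5 - out_h / 2
            z = focal
            # Rotate the ray about the vertical axis by the yaw angle.
            xr = x * math.cos(yaw) + z * math.sin(yaw)
            zr = -x * math.sin(yaw) + z * math.cos(yaw)
            # Ray direction as longitude and latitude (positive = down).
            lon = math.atan2(xr, zr)
            lat = math.atan2(y, math.hypot(xr, zr))
            # Map angles to panorama pixel indices (nearest neighbour).
            px = int((lon / (2 * math.pi) + 0.5) * pano_w) % pano_w
            py = min(int((lat / math.pi + 0.5) * pano_h), pano_h - 1)
            row.append(pano[py][px])
        view.append(row)
    return view
```

A practical version would typically interpolate between panorama pixels and support full 3D rotations; those are omitted here to keep the geometry visible.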

HR-APR: APR-agnostic framework with uncertainty estimation and hierarchical refinement for camera relocalisation

2024 IEEE International Conference on Robotics and Automation (ICRA). 2024

@inproceedings{liu2024hr,

author = {Changkun Liu and Shuai Chen and Yukun Zhao and Huajian Huang and Victor Prisacariu and Tristan Braud},

booktitle = {2024 IEEE International Conference on Robotics and Automation (ICRA)},

month = {may},

organization = {IEEE},

pages = {8544--8550},

title = {{HR-APR}: {APR}-agnostic framework with uncertainty estimation and hierarchical refinement for camera relocalisation},

year = {2024}

}

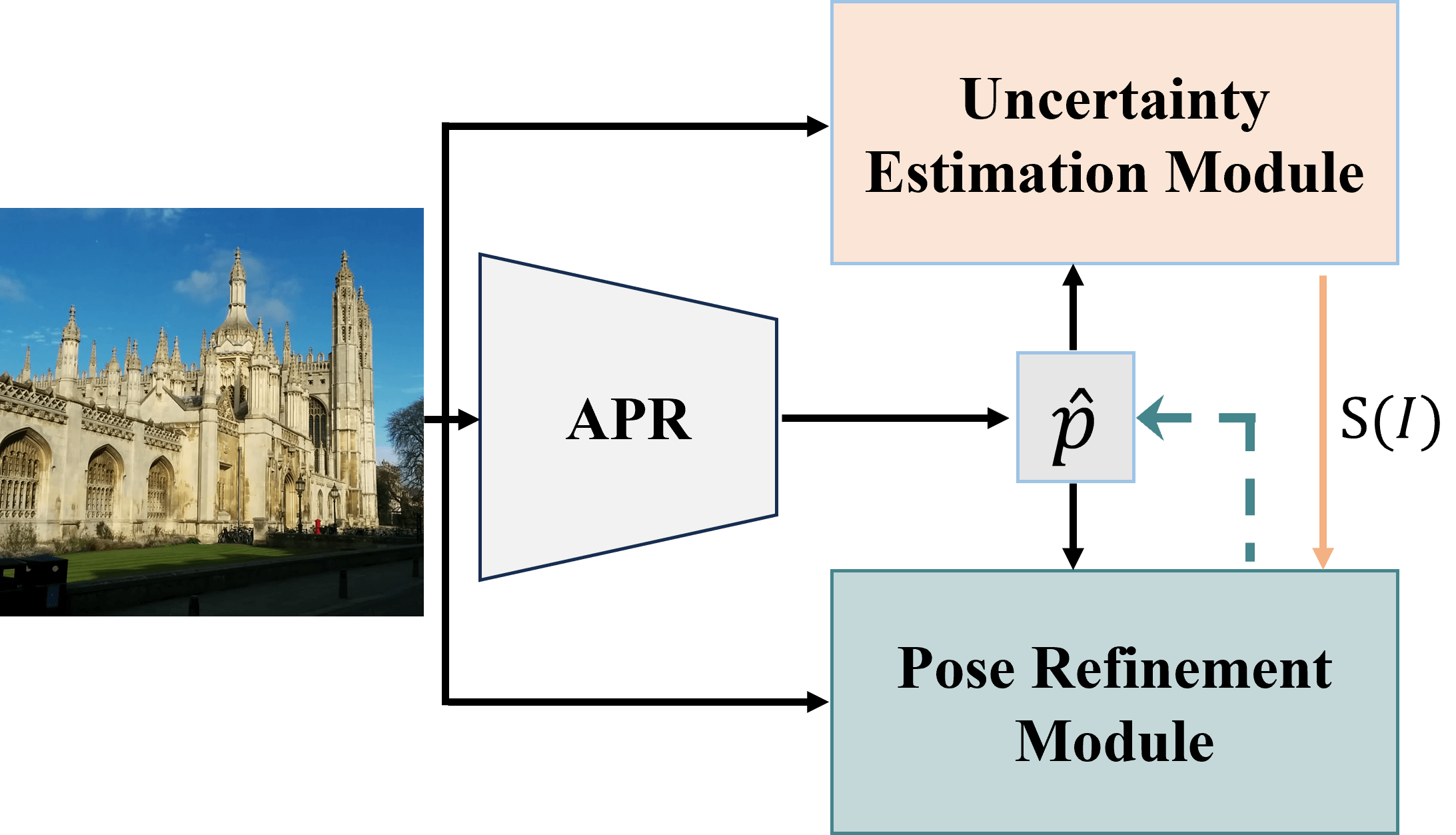

Absolute Pose Regressors (APRs) directly estimate camera poses from monocular images, but their accuracy is unstable for different queries. Uncertainty-aware APRs provide uncertainty information on the estimated pose, alleviating the impact of these unreliable predictions. However, existing uncertainty modelling techniques are often coupled with a specific APR architecture, resulting in suboptimal performance compared to state-of-the-art (SOTA) APR methods. This work introduces a novel APR-agnostic framework, HR-APR, that formulates uncertainty estimation as cosine similarity estimation between the query and database features. It neither relies on nor affects the APR network architecture, which makes it flexible and computationally efficient. In addition, we take advantage of the uncertainty for pose refinement to enhance the performance of APR. Extensive experiments demonstrate the effectiveness of our framework, reducing 27.4% and 15.2% of computational overhead on the 7Scenes and Cambridge Landmarks datasets while maintaining the SOTA accuracy in single-image APRs.
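
The idea of using cosine similarity as an uncertainty signal and refining only unreliable predictions can be sketched as follows. This is an illustrative assumption-laden example rather than the HR-APR implementation: the function names, the 0.9 threshold, and the `refine` callback are hypothetical, and the abstract does not say how the database feature is selected, so the sketch simply takes it as an input.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_reliable(query_feat, database_feat, threshold=0.9):
    """Treat a prediction as reliable when the features are similar enough.

    The threshold is a hypothetical value chosen for illustration.
    """
    return cosine_similarity(query_feat, database_feat) >= threshold


def localize(query_feat, apr_pose, database_feat, refine, threshold=0.9):
    """Keep the APR pose if it looks reliable, otherwise refine it.

    `refine` is a caller-supplied function (hypothetical) that takes the
    coarse pose and returns an improved one.
    """
    if is_reliable(query_feat, database_feat, threshold):
        return apr_pose
    return refine(apr_pose)
```

Because the check only compares feature vectors, it runs independently of whichever pose regressor produced `apr_pose`, and the refinement step is skipped for queries already judged reliable.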

MARViN: Mobile AR Dataset with Visual-Inertial Data

2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). 2024

@inproceedings{liu2024marvin,

author = {Changkun Liu and Yukun Zhao and Tristan Braud},

booktitle = {2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

month = {mar},

organization = {IEEE},

pages = {532--538},

title = {MARViN: Mobile AR Dataset with Visual-Inertial Data},

year = {2024}

}

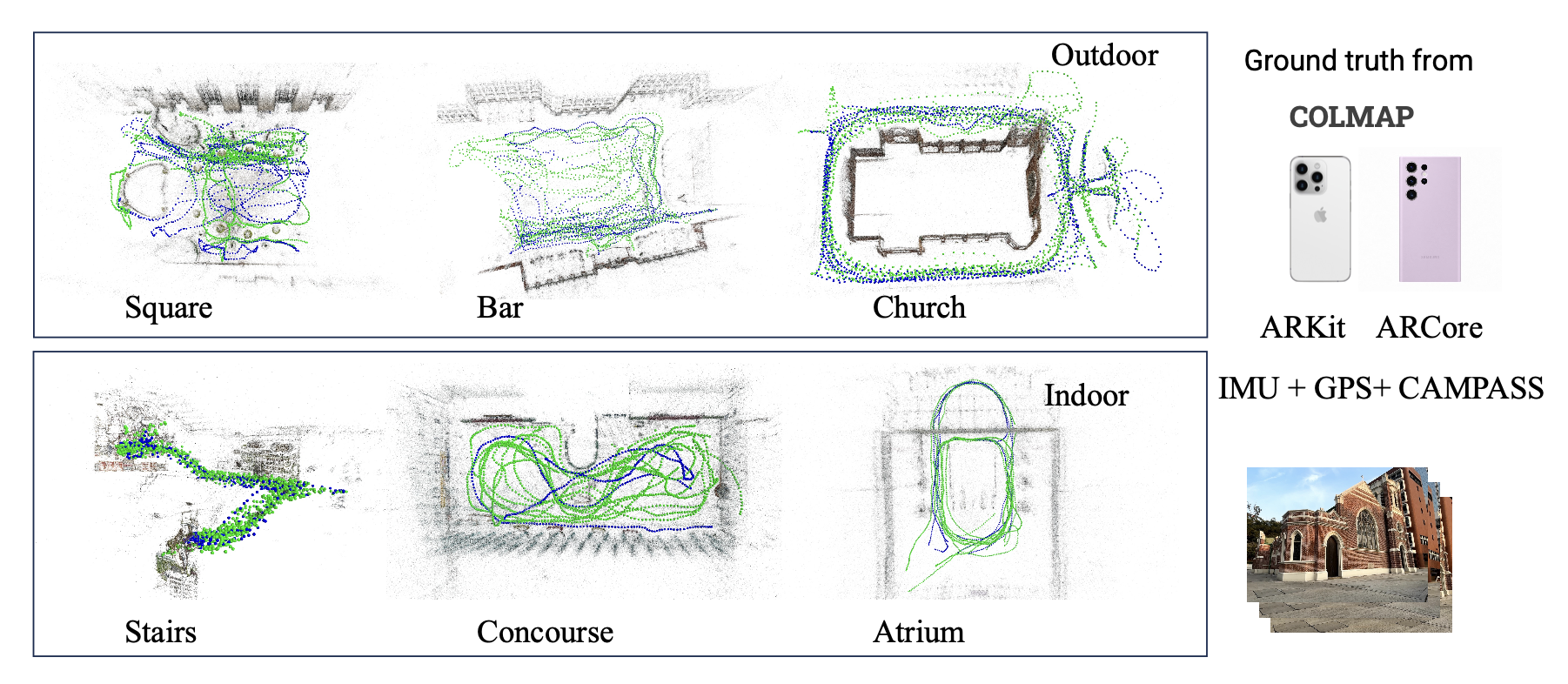

Accurate camera relocalisation is a fundamental technology for extended reality (XR), facilitating the seamless integration and persistence of digital content within the real world. Benchmark datasets that measure camera pose accuracy have driven progress in visual relocalisation research. Despite notable progress in this field, there is a limited availability of datasets incorporating Visual Inertial Odometry (VIO) data from typical mobile AR frameworks such as ARKit or ARCore. This paper presents a new dataset, MARViN, comprising diverse indoor and outdoor scenes captured using heterogeneous mobile consumer devices. The dataset includes camera images, ARCore or ARKit VIO data, and raw sensor data for several mobile devices, together with the corresponding ground-truth poses. MARViN allows us to demonstrate the capability of ARKit and ARCore to provide relative pose estimates that closely approximate ground truth within a short timeframe. Subsequently, we evaluate the performance of mobile VIO data in enhancing absolute pose estimations in both a desktop simulation and a user study.

MobileARLoc: On-device robust absolute localisation for pervasive markerless mobile AR

2024 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops). 2024

@inproceedings{liu2024mobilearloc,

author = {Changkun Liu and Yukun Zhao and Tristan Braud},

booktitle = {2024 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops)},

month = {Mar},

organization = {IEEE},

pages = {544--549},

title = {{MobileARLoc}: On-device robust absolute localisation for pervasive markerless mobile {AR}},

year = {2024}

}

Recent years have seen significant improvement in absolute camera pose estimation, paving the way for pervasive markerless Augmented Reality (AR). However, accurate absolute pose estimation techniques are computation- and storage-heavy, requiring computation offloading. As such, AR systems rely on visual-inertial odometry (VIO) to track the device’s relative pose between requests to the server. However, VIO suffers from drift, requiring frequent absolute repositioning. This paper introduces MobileARLoc, a new framework for on-device large-scale markerless mobile AR that combines an absolute pose regressor (APR) with a local VIO tracking system. Absolute pose regressors (APRs) provide fast on-device pose estimation at the cost of reduced accuracy. To address APR accuracy and reduce VIO drift, MobileARLoc creates a feedback loop where VIO pose estimations refine the APR predictions. The VIO system identifies reliable predictions of APR, which are then used to compensate for the VIO drift. We comprehensively evaluate MobileARLoc through dataset simulations. MobileARLoc halves the error compared to the underlying APR and achieves fast (80 ms) on-device inference speed.

VR PreM+: An immersive pre-learning branching visualization system for museum tours

Proceedings of the Eleventh International Symposium of Chinese CHI. 2023

@inproceedings{gao2023vr,

author = {Ze Gao and Xiang Li and Changkun Liu and Xian Wang and Anqi Wang and Liang Yang and Yuyang Wang and Pan Hui and Tristan Braud},

booktitle = {Proceedings of the Eleventh International Symposium of Chinese CHI},

month = {nov},

pages = {374--385},

title = {{VR PreM+}: An immersive pre-learning branching visualization system for museum tours},

year = {2023}

}

We present VR PreM+, an innovative VR system designed to enhance web exploration beyond traditional computer screens. Unlike static 2D displays, VR PreM+ leverages 3D environments to create an immersive pre-learning experience. Keyword-based information retrieval allows users to manage and connect various content sources in a dynamic 3D space, improving communication and data comparison. We conducted preliminary and user studies that demonstrated efficient information retrieval, increased user engagement, and a greater sense of presence. These findings yielded three design guidelines for future VR information systems: display, interaction, and user-centric design. VR PreM+ bridges the gap between traditional web browsing and immersive VR, offering an interactive and comprehensive approach to information acquisition. It holds promise for research, education, and beyond.

Unlogical Instrument: Material-driven gesture-controlled sound installation

Companion Publication of the 2023 ACM Designing Interactive Systems Conference (Art Gallery). 2023

@inproceedings{wu2023unlogical,

author = {Zhen Wu and Ze Gao and Tristan Braud},

booktitle = {Companion Publication of the 2023 ACM Designing Interactive Systems Conference (Art Gallery)},

month = {jul},

pages = {86--89},

title = {{Unlogical Instrument}: Material-driven gesture-controlled sound installation},

year = {2023}

}

This proposal describes the design and demonstration of the Unlogical Instrument. This textile-based gesture-controlled sound interface invites the audience to generate sound by interacting with the textile using different gestures, such as poking, flicking, and patting. Unlogical is a statement about breaking the dogmatic approach of commonly used musical instruments. It brings resilience to the form of musical expression and interaction for the audience. It introduces an interactive mechanism mediated by intuitive human gestures and the curiosity to explore tangible material. Unlogical Instrument locates the focus on the textile. This artwork investigates the relationship between the gestures and the sound perception that originates from the textile through a material-driven approach. Inkjet printing is applied to transfer the original textile into a sensorial interface. The audience will listen to the sound while interacting with the textile surface, exploring and understanding this instrument through free-form playing.